Introduction

Artificial intelligence (AI) is no longer a futuristic concept — it’s a practical tool transforming how we build and use web applications. From personalized recommendations on e-commerce sites to intelligent chatbots and fraud detection systems, AI models are now core to modern user experiences.

But for many developers, the challenge isn’t building an AI model — it’s integrating that model into a real-world web application so it delivers value at scale.

This guide explores the fundamentals of integrating AI models into web applications, including use cases, integration strategies, and the tools developers need to deploy AI seamlessly.

Why Integrate AI into Web Applications?

- Enhanced User Experience: Personalization, recommendations, and smarter search.

- Automation of Repetitive Tasks: Document classification, image tagging, and sentiment analysis.

- Decision Support: AI-powered dashboards for predictive analytics.

- Competitive Differentiation: AI features like chatbots, auto-complete, or voice interfaces can make apps stand out.

- Revenue Growth: Smarter product recommendations can lift sales, and fraud prevention reduces losses.

Common Use Cases of AI in Web Applications

- Natural Language Processing (NLP): Chatbots, text summarization, sentiment analysis.

- Computer Vision (CV): Image recognition, product tagging, face detection.

- Recommendation Engines: Personalized shopping or content suggestions.

- Predictive Analytics: Forecasting sales, demand, or user churn.

- Fraud Detection & Security: Anomaly detection in financial transactions.

- Voice Interfaces: Speech-to-text or conversational AI for web apps.

Types of AI Models for Web Integration

- Pre-Trained Models: Hosted by providers such as OpenAI, Hugging Face, and Google AI, and accessed through APIs. Great for quick integration.

- Custom-Trained Models: Built with TensorFlow, PyTorch, or scikit-learn. These require deployment on your own server or container.

- Hybrid Approaches: Use pre-trained models as a baseline and fine-tune them on domain-specific data.

Integration Approaches

1. API Integration (Most Common)

- Hosted AI models are accessible via REST or GraphQL APIs.

- Example: Using OpenAI’s API to add natural language features.

- Pros: Quick, scalable, minimal setup.

- Cons: Vendor lock-in, recurring costs, latency concerns.
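A minimal sketch of API integration using only Python's standard library. The endpoint URL, the {"text": ...} payload, and the bearer-token header are hypothetical placeholders; each provider documents its own request format. The timeout and retry-with-backoff logic is one way to soften the latency and reliability concerns listed above.

```python
import json
import time
import urllib.error
import urllib.request

# Hypothetical endpoint; every provider documents its own URL and payload format.
API_URL = "https://api.example.com/v1/sentiment"


def build_request(text: str, api_key: str) -> urllib.request.Request:
    """Build a JSON POST request for a hosted model (payload shape is assumed)."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def call_hosted_model(text, api_key, retries=3, timeout=5.0, backoff=0.5):
    """Call the hosted model with a timeout, retrying transient failures."""
    request = build_request(text, api_key)
    for attempt in range(retries):
        try:
            with urllib.request.urlopen(request, timeout=timeout) as response:
                return json.loads(response.read().decode("utf-8"))
        except urllib.error.HTTPError as err:
            # Retry only rate limiting (429) and server errors (5xx); other 4xx errors are final
            if (err.code != 429 and err.code < 500) or attempt == retries - 1:
                raise
        except (urllib.error.URLError, TimeoutError):
            # Network failure or timeout: retry unless this was the last attempt
            if attempt == retries - 1:
                raise
        # Exponential backoff between attempts: 0.5 s, 1 s, 2 s, ...
        time.sleep(backoff * 2 ** attempt)
```

Retrying only on 429 and 5xx responses avoids hammering the provider with requests that will never succeed, such as those rejected for a bad API key.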

2. SDKs and Libraries

- Use official SDKs (TensorFlow.js, PyTorch Mobile, Hugging Face Transformers).

- Pros: Local integration, reduced latency.

- Cons: More setup, requires compute on your side.

3. On-Premise or Cloud Deployment of Custom Models

- Deploy models using Docker containers, Kubernetes, or cloud ML services.

- Example: Deploying a TensorFlow model on AWS SageMaker or Google Cloud Vertex AI (the successor to Google AI Platform).

- Pros: Full control, customization, data privacy.

- Cons: Requires MLOps infrastructure and ongoing monitoring.

4. Edge Deployment (For Web + IoT)

- Run models on user devices with TensorFlow.js or ONNX Runtime Web (the successor to ONNX.js).

- Ideal for lightweight inference without server dependency.
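Edge deployment only works if the model is small enough to download and run on the user's device. One common way to shrink it is post-training quantization. The pure-Python sketch below shows the core arithmetic of symmetric int8 quantization on a plain list of weights; real toolchains (for example, the TensorFlow Lite converter or ONNX Runtime's quantization tools) apply the same idea per tensor or per channel, so treat this as an illustration rather than a production converter.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One float scale factor is stored alongside the integer weights
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights at inference time."""
    return [v * scale for v in q]
```

Storing each weight as one signed byte instead of a 32-bit float cuts weight storage roughly fourfold, at the cost of a rounding error of at most half the scale factor per weight.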

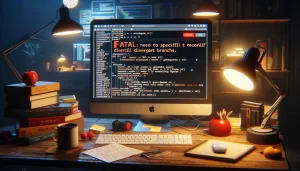

Example: Simple AI Integration with Flask

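```python
from flask import Flask, request, jsonify
import joblib

app = Flask(__name__)

# Load the trained model once at startup rather than on every request
model = joblib.load("sentiment_model.pkl")


@app.route("/predict", methods=["POST"])
def predict():
    data = request.get_json(silent=True)
    # Reject requests that are not JSON or lack the expected "text" field
    if not isinstance(data, dict) or "text" not in data:
        return jsonify({"error": "expected a JSON body with a 'text' field"}), 400
    # predict() takes a list of inputs and returns one label per input
    prediction = model.predict([data["text"]])
    # Cast the NumPy scalar to a built-in type so jsonify can serialize it
    return jsonify({"sentiment": str(prediction[0])})


if __name__ == "__main__":
    # debug=True enables the interactive debugger; use it only in local development
    app.run(debug=True)
```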

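Here, a sentiment analysis model is integrated into a Flask web app. Clients send a JSON body such as {"text": "Great service"} in a POST request to /predict, and the app returns the predicted label. The example assumes sentiment_model.pkl is a scikit-learn pipeline (text vectorizer plus classifier) saved with joblib, so it accepts raw strings directly.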

Step-by-Step Workflow for AI Integration

Step 1: Define the Problem Clearly

- Identify what you want AI to do: classify, recommend, detect, or predict.

- Example: “Classify customer support tickets by priority.”

Step 2: Select the Right Model

- Pre-trained API (e.g., OpenAI, Google Cloud Vision).

- Fine-tuned model (e.g., a Hugging Face Transformers model).

- Custom-built ML model trained on domain-specific data.
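The choice made in this step does not have to be permanent if the web layer talks to a small interface instead of a specific provider. The sketch below uses a typing.Protocol for that interface; KeywordBaseline and HostedModel are hypothetical placeholders standing in for a custom model and a pre-trained API, not real library classes.

```python
import re
from typing import Callable, Protocol


class SentimentModel(Protocol):
    """Any object with this method can back the web layer."""

    def predict(self, text: str) -> str: ...


class KeywordBaseline:
    """Hypothetical stand-in for a custom model trained on your own data."""

    POSITIVE = {"amazing", "great", "love"}
    NEGATIVE = {"terrible", "awful", "hate"}

    def predict(self, text: str) -> str:
        words = set(re.findall(r"[a-z']+", text.lower()))
        score = len(words & self.POSITIVE) - len(words & self.NEGATIVE)
        if score > 0:
            return "positive"
        if score < 0:
            return "negative"
        return "neutral"


class HostedModel:
    """Hypothetical adapter for a pre-trained API; `client` returns a dict with a 'label'."""

    def __init__(self, client: Callable[[str], dict]):
        self.client = client

    def predict(self, text: str) -> str:
        return self.client(text)["label"]


def classify(model: SentimentModel, text: str) -> str:
    # Callers depend only on the protocol, so swapping providers is a one-line change
    return model.predict(text)
```

For example, classify(KeywordBaseline(), "This product is amazing!") returns "positive", and moving to a hosted API later means passing a HostedModel instead. This also limits the vendor lock-in risk discussed later.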

Step 3: Prepare the Infrastructure

- Decide on hosting: cloud, on-premise, or edge.

- Use containers (Docker) for portability.

- If scaling is a priority, consider Kubernetes or serverless platforms.

Step 4: Build the API Layer

Wrap your AI model in an API (Flask, FastAPI, Django, Express). This enables your web app to make requests like:

```http
POST /predict
Content-Type: application/json

{ "text": "This product is amazing!" }
```

Step 5: Integrate with Frontend

- Use AJAX/Fetch API for real-time predictions.

- For heavy inference, show loading states.

- Example: Sentiment analysis results shown instantly on a review submission form.

Step 6: Deploy and Monitor

- Deploy via managed services such as AWS SageMaker, Google Cloud Vertex AI, or Azure Machine Learning.

- Set up logging, performance monitoring, and error handling.

- Track metrics like latency, accuracy, and throughput.
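As a minimal in-process sketch of latency tracking, a decorator can time each prediction call and a helper can summarize the samples as percentiles. Keeping samples in a module-level list is only for illustration; a real deployment would export them to a monitoring system. Accuracy tracking additionally needs ground-truth labels, which this sketch does not cover.

```python
import time
from functools import wraps
from statistics import quantiles

latencies_ms = []  # illustration only; export to a metrics system in production


def track_latency(fn):
    """Record the wall-clock duration of each call in milliseconds."""
    @wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            # Runs even if the model raises, so failed calls are timed too
            latencies_ms.append((time.perf_counter() - start) * 1000)
    return wrapper


def latency_summary(samples):
    """Return p50 and p95 latency; the tail percentile exposes slow outliers."""
    cuts = quantiles(samples, n=100)  # 99 cut points: cuts[k-1] is the k-th percentile
    return {"p50": cuts[49], "p95": cuts[94]}
```

Reporting p95 alongside the median matters because a handful of slow inferences can hurt user experience even when the average looks fine.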

Best Practices for AI Integration

- Keep Models Lightweight for Web: Large models slow response times. Optimize with pruning, quantization, or distillation.

- Focus on Latency: Aim for responses within 1–2 seconds in interactive features, and cache results for repeat queries.

- Use Scalable Infrastructure: Auto-scale inference servers to handle traffic spikes.

- Ensure Explainability: Provide context for AI decisions, for example by showing the top features that influenced a prediction.

- Maintain Data Privacy: Don't send sensitive user data to third-party APIs without anonymizing it first.

- Implement Feedback Loops: Let users rate AI outputs, and use those ratings to retrain and improve models.
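Caching repeat queries is one of the cheapest latency wins. The sketch below is a small LRU cache with a time-to-live, written from scratch because functools.lru_cache has no expiry. Its normalization (lowercasing and collapsing whitespace) is an assumption that only holds if your model ignores case and spacing; drop it otherwise.

```python
import time
from collections import OrderedDict


class PredictionCache:
    """Small LRU cache with a time-to-live, keyed on normalized input text."""

    def __init__(self, max_size=1024, ttl_seconds=300.0, clock=time.monotonic):
        self.max_size = max_size
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = OrderedDict()  # key -> (expires_at, value)

    @staticmethod
    def _key(text):
        # Treat "Great product" and "  great   product " as the same query
        return " ".join(text.lower().split())

    def get_or_compute(self, text, predict):
        key = self._key(text)
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            self._store.move_to_end(key)  # mark as recently used
            return hit[1]
        value = predict(text)  # cache miss or expired entry: run the model
        self._store[key] = (now + self.ttl, value)
        self._store.move_to_end(key)
        if len(self._store) > self.max_size:
            self._store.popitem(last=False)  # evict the least recently used entry
        return value
```

The TTL keeps cached answers from going stale after a model is retrained, and the size cap bounds memory use.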

Common Pitfalls to Avoid

- Vendor Lock-In: Relying solely on one AI provider can increase costs and make switching providers difficult.

- Overfitting Models: Ensure training data is diverse enough for generalization.

- Ignoring Edge Cases: AI must handle “unknown” inputs gracefully.

- Underestimating Compute Costs: Inference at scale can become expensive.

- Skipping Security: Always secure AI endpoints against unauthorized use.
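A minimal sketch of securing an inference endpoint with API keys, using only the standard library. The demo key is a hypothetical placeholder; in practice the digests would come from a secret store. Storing SHA-256 digests rather than raw keys means a leaked config does not expose usable keys.

```python
import hashlib
import hmac

# Hypothetical: store only SHA-256 digests of issued keys, never the raw keys.
VALID_KEY_DIGESTS = {
    hashlib.sha256(b"demo-key-123").hexdigest(),
}


def is_authorized(auth_header):
    """Check an 'Authorization: Bearer <key>' header against known key digests."""
    if not auth_header or not auth_header.startswith("Bearer "):
        return False
    presented = auth_header[len("Bearer "):].encode("utf-8")
    digest = hashlib.sha256(presented).hexdigest()
    # compare_digest runs in constant time, avoiding timing side channels
    return any(hmac.compare_digest(digest, known) for known in VALID_KEY_DIGESTS)
```

Authentication alone does not cap spending, so pairing it with per-key rate limits also helps with the compute-cost pitfall above.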

Real-World Examples

1. E-commerce Recommendation Engine

Amazon’s product suggestions are powered by AI recommendation systems. Integration with the web app enables real-time, personalized shopping experiences.

2. AI-Powered Chatbots

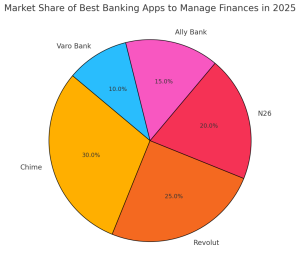

Banking apps integrate NLP models for 24/7 support. These bots answer FAQs, resolve queries, and escalate complex issues to humans.

3. Fraud Detection in Finance

FinTech apps integrate anomaly detection models with their transaction systems. Suspicious activities trigger instant alerts.

4. Content Moderation in Social Platforms

Platforms like Facebook and YouTube deploy computer vision and NLP models to filter harmful content, often before it reaches users.
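The fraud detection example above depends on anomaly detection. Production systems typically use trained models over many features; the z-score rule below is a deliberately simple stand-in on a single feature, transaction amount, meant only to show where an alert would fire. The threshold of 3 standard deviations is a common rule of thumb, not a recommendation for any specific system.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the historical mean.

    `history` needs at least two values for stdev() to be defined.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in history: anything different from the mean stands out
        return [amount != mu for amount in new_amounts]
    return [abs(amount - mu) / sigma > threshold for amount in new_amounts]
```

In an integrated system, a True flag would trigger the instant alert described in the fraud detection example rather than silently blocking the transaction.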

Conclusion

Integrating AI models into web applications allows developers to build smarter, more personalized, and highly automated systems.

Key takeaways:

- Choose the right integration approach (API, SDK, custom deployment).

- Optimize for performance, scalability, and user experience.

- Don’t ignore monitoring, explainability, or compliance.

- Treat AI integration as a living system — continuously update and retrain.

Done well, AI integration transforms web apps from static tools into intelligent platforms that adapt and learn over time.

FAQs

1. Do I need deep ML expertise to integrate AI into web apps?

No. Many platforms (OpenAI, Google AutoML, Hugging Face) provide APIs and SDKs that simplify integration.

2. Can AI integration slow down my app?

Yes, if models are too large or infrastructure is weak. Use lightweight models and caching to improve performance.

3. Should I use pre-trained or custom models?

For general tasks (sentiment, translation), pre-trained models are fine. For domain-specific needs, fine-tuned/custom models work best.

4. How do I deploy AI models in production?

Common methods include Docker containers, Kubernetes, or managed services like AWS SageMaker.

5. How can I ensure ethical AI integration?

Use explainability tools, avoid biased training data, and be transparent with users about AI-driven features.

6. Can AI run directly in the browser?

Yes. Frameworks like TensorFlow.js and ONNX Runtime Web (formerly ONNX.js) enable client-side inference for lighter models.