Introduction

The demand for faster software development and delivery has led to a dramatic shift in how testing is approached. In modern DevOps and agile environments, testing needs to be not only thorough but also automated, scalable, and intelligent. Traditional methods of writing unit and integration tests, however, are often time-consuming, prone to human error, and difficult to scale across large codebases.

This is where Artificial Intelligence (AI) comes in. AI-powered tools can now assist in writing, maintaining, executing, and optimizing both unit and integration tests, turning testing from a manual burden into a strategic advantage.

In this comprehensive guide, we’ll explore:

- What unit and integration testing entail

- Why traditional approaches are no longer enough

- How AI enhances testing automation

- The best AI-powered tools available

- Real-world implementation strategies

- Challenges and how to overcome them

1. Understanding Unit and Integration Testing

What Is Unit Testing?

Unit testing involves testing the smallest testable parts of an application, usually functions or methods, to ensure they perform as expected in isolation. Unit tests are typically fast, automated, and run frequently during development.

Characteristics:

- Focus on a single function/class

- Use of mocks/stubs for dependencies

- Usually written by developers

- High volume, low complexity

Example:

    def add(a, b):
        return a + b


    def test_add():
        assert add(2, 3) == 5
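
The characteristics above mention mocks and stubs for dependencies. Here is a minimal sketch of what that can look like, assuming a hypothetical `convert` function that receives a rate-lookup client as an argument (both names are illustrative and not taken from any real library):

```python
from unittest.mock import Mock


def convert(amount, currency, rate_client):
    """Convert an amount using a rate fetched from an injected client."""
    rate = rate_client.get_rate(currency)  # the external dependency
    return round(amount * rate, 2)


def test_convert_uses_rate_from_client():
    # Replace the real dependency with a mock so the test stays isolated.
    rate_client = Mock()
    rate_client.get_rate.return_value = 0.5

    assert convert(10, "EUR", rate_client) == 5.0
    rate_client.get_rate.assert_called_once_with("EUR")
```

Passing the client in as a parameter is one design choice that keeps the unit easy to isolate; patching a module-level import would be another.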

What Is Integration Testing?

Integration testing checks how multiple components work together. It ensures that interfaces between modules or external systems operate correctly, whether it’s between APIs, databases, or external services.

Characteristics:

- Covers data flow between modules

- May require real test environments

- Slower and more complex than unit tests

- Often part of post-commit test suites

Example Use Cases:

- A user service communicating with a payment gateway

- A front-end client integrating with a backend API
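
To make the contrast with a unit test concrete, here is a rough sketch of an integration test that runs a service and its repository against a real in-memory SQLite database. `OrderRepository` and `CheckoutService` are hypothetical classes invented for this example:

```python
import sqlite3


class OrderRepository:
    """Persists orders in a SQL database."""

    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(id INTEGER PRIMARY KEY, user TEXT, total REAL)"
        )

    def add(self, user, total):
        cur = self.conn.execute(
            "INSERT INTO orders (user, total) VALUES (?, ?)", (user, total)
        )
        self.conn.commit()
        return cur.lastrowid

    def total_for(self, user):
        row = self.conn.execute(
            "SELECT COALESCE(SUM(total), 0) FROM orders WHERE user = ?", (user,)
        ).fetchone()
        return row[0]


class CheckoutService:
    """Business logic that depends on the repository."""

    def __init__(self, repo):
        self.repo = repo

    def checkout(self, user, prices):
        return self.repo.add(user, sum(prices))


def test_checkout_persists_order_total():
    # No mocks here: the service, the repository, and the SQL run together.
    conn = sqlite3.connect(":memory:")
    service = CheckoutService(OrderRepository(conn))

    service.checkout("alice", [10.0, 5.5])
    service.checkout("alice", [4.5])

    assert service.repo.total_for("alice") == 20.0
    assert service.repo.total_for("bob") == 0
    conn.close()
```

A test like this catches mistakes that isolated tests miss, such as a wrong column name in the query, at the cost of being slower and needing a database.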

2. The Limitations of Traditional Testing

While automated testing is a best practice, writing and maintaining test cases comes with challenges:

Time-Intensive Test Creation

Developers often deprioritize test creation due to tight deadlines. Writing comprehensive tests manually is tedious and repetitive.

Incomplete Coverage

Manual test writing often misses edge cases or low-visibility code paths, especially in large and legacy systems.

Test Maintenance Overhead

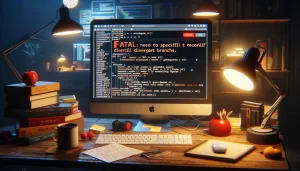

When code changes, existing tests often break, and developers must spend time updating or rewriting them, which introduces delay and frustration.

Skills Gap

Not every developer is skilled in test writing, especially in areas involving mocking, stubbing, or integration workflows.

Fragile Tests

Integration tests can fail due to environment issues, flakiness in third-party APIs, or order dependencies.

3. How AI Transforms Unit and Integration Testing

AI for Unit Testing

- Code Analysis: AI scans source code, detects logical units, and auto-generates test cases.

- Test Suggestions: Provides likely assertions and corner cases based on code logic.

- Coverage Mapping: Identifies which branches or functions remain untested.

- Natural Language Descriptions: AI can generate human-readable explanations for what a test is validating.

AI for Integration Testing

- System Modeling: Understands interactions between services and suggests integration scenarios.

- Auto-Mocking: AI automatically generates mocks for external services.

- Dependency Mapping: Identifies service dependencies and recommends sequencing or stubbing logic.

- Self-Healing Tests: Updates test scripts as APIs or data schemas evolve.
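
Auto-mocking does not have to start from scratch. Python's standard library can already derive a mock from an interface with `unittest.mock.create_autospec`, which is a reasonable baseline for what AI-generated mocks should at least match. In this sketch `PaymentGateway` and `pay` are hypothetical names:

```python
from unittest.mock import create_autospec


class PaymentGateway:
    """Hypothetical client for an external payment service."""

    def charge(self, user_id: str, amount_cents: int) -> str:
        raise NotImplementedError("would call the real service")


def pay(gateway, user_id, amount_cents):
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    return gateway.charge(user_id, amount_cents)


def test_pay_charges_gateway():
    # The mock is derived from the class, so calls that do not match
    # the real method signature raise TypeError instead of passing silently.
    gateway = create_autospec(PaymentGateway, instance=True)
    gateway.charge.return_value = "txn-1"

    assert pay(gateway, "u1", 500) == "txn-1"
    gateway.charge.assert_called_once_with("u1", 500)
```

Because the mock follows the real signature, a renamed or removed parameter on `charge` breaks the test, which is the kind of drift self-healing tools try to detect and repair.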

4. Top AI Tools for Automated Testing

Here’s a look at some of the leading platforms using AI for test automation:

1. Diffblue Cover

- Language: Java

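- Features: Automatically writes unit tests by analyzing and executing the code; the vendor describes its approach as reinforcement learning rather than purely static analysis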

- Use Case: Great for legacy Java applications lacking coverage

2. CodiumAI (now Qodo)

- Languages: Python, JavaScript, TypeScript

- Features: Suggests and validates test cases in real-time during coding

- Integrations: IDE plugins (VS Code, JetBrains)

3. OpenAI Codex / GitHub Copilot

- Language: Multi-language support

- Features: Suggests unit tests while writing production code

- Use Case: Quick generation of basic test scaffolding

4. testRigor

- Focus: End-to-end and integration testing

- Features: Write tests in plain English; AI handles selectors and maintenance

- Best for: QA teams with limited scripting experience

5. Mabl

- Focus: Intelligent integration and UI testing

- Features: Visual testing, API testing, and auto-maintenance

- Integrations: CI/CD pipelines and web apps

6. Functionize

- Focus: AI-driven end-to-end testing with low-code/no-code support

- Features: Self-healing scripts, auto-locators, and NLP test creation

5. Workflow: Automating Tests with AI in CI/CD

Here’s how a modern pipeline using AI for testing might look:

Step 1: Code Commit

While the developer writes code, AI tooling in the IDE (like CodiumAI or Copilot) suggests tests in real time. The developer then commits the code and the accepted tests to the repository.

Step 2: Test Generation

CI pipeline triggers an AI model to:

- Generate unit tests based on the diff

- Suggest integration tests for affected modules
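
Generating tests "based on the diff" first requires knowing what changed. The sketch below is one assumed way to do that: it parses a unified diff, as produced by `git diff`, into changed line ranges per file, which a pipeline could then hand to whatever test generator it uses. The `changed_lines` helper is hypothetical and ignores edge cases such as renames.

```python
import re

# Matches hunk headers such as "@@ -10,6 +12,8 @@" and captures the new side.
HUNK_HEADER = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,(\d+))? @@")


def changed_lines(diff_text):
    """Map each changed file to the new-side line ranges touched by the diff."""
    changes = {}
    current = None
    for line in diff_text.splitlines():
        if line.startswith("+++ "):
            path = line[4:].strip()
            if path == "/dev/null":  # the file was deleted
                current = None
            else:
                # Git prefixes new-side paths with "b/".
                current = path[2:] if path.startswith("b/") else path
        elif current is not None:
            match = HUNK_HEADER.match(line)
            if match:
                start = int(match.group(1))
                length = 1 if match.group(2) is None else int(match.group(2))
                if length:  # a length of 0 means the hunk only removes lines
                    changes.setdefault(current, []).append(
                        (start, start + length - 1)
                    )
    return changes


diff = """\
--- a/app/math_utils.py
+++ b/app/math_utils.py
@@ -1,2 +1,5 @@
 def add(a, b):
     return a + b
+
+def sub(a, b):
+    return a - b
"""

print(changed_lines(diff))  # {'app/math_utils.py': [(1, 5)]}
```

Limiting generation to the touched ranges keeps the prompt small and avoids regenerating tests for code that did not change.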

Step 3: Dependency Identification

AI scans code to identify external service calls and generate mocks/stubs for them automatically.
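
A simple, rule-based version of this scan can be sketched with Python's `ast` module. The list of modules treated as "external" below is an assumption for illustration, and the `external_calls` helper does not follow aliased imports such as `import requests as r`:

```python
import ast

# Assumption: calls made through these modules count as external.
EXTERNAL_MODULES = {"requests", "boto3", "smtplib"}


def external_calls(source):
    """Return (line, dotted name) for calls rooted at an external module."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Walk down attribute chains such as requests.get or boto3.client.
        parts = []
        target = node.func
        while isinstance(target, ast.Attribute):
            parts.append(target.attr)
            target = target.value
        if isinstance(target, ast.Name) and target.id in EXTERNAL_MODULES and parts:
            parts.append(target.id)
            found.append((node.lineno, ".".join(reversed(parts))))
    return sorted(found)


source = '''
import requests

def fetch_user(user_id):
    return requests.get(f"https://example.com/users/{user_id}").json()
'''

print(external_calls(source))  # [(5, 'requests.get')]
```

Each hit marks a place where a mock or stub is needed before the surrounding function can be tested in isolation.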

Step 4: Test Execution

Tests run in CI pipeline:

- Unit tests for quick validation

- Integration tests on staging environments or containers

Step 5: Feedback Loop

- Failed tests are fed back to the AI tooling as a signal for repairing or regenerating tests

- Test coverage reports highlight gaps

- Developers refine suggestions with minimal manual effort

6. Real-World Use Cases

A. Refactoring Legacy Code

Banks, insurance firms, and industrial software vendors use tools like Diffblue Cover to automatically add unit tests to legacy applications that start with 0% coverage.

B. Rapid MVP Development

Startups use GitHub Copilot to auto-generate both unit and integration tests during active development, speeding up validation cycles.

C. CI/CD at Scale

Enterprises running 100+ microservices automate integration tests using Mabl and testRigor, with real-time SLA checks and visual diffs.

7. Best Practices for AI-Based Test Automation

1. Treat AI as an Assistant, Not a Replacement

AI should augment developer productivity, not eliminate human oversight. Always review AI-generated test cases for accuracy.

2. Maintain Separate Unit and Integration Test Suites

Ensure that AI tools don’t blur the line between isolated and integrated tests. Maintain clarity in your pipeline.
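
One way to keep that line visible is to tag integration tests explicitly and build the two suites from the tags. The sketch below uses only the standard `unittest` module; the `integration` decorator and `split_suites` helper are hypothetical names for this example. pytest users can achieve the same with custom markers and the `-m` option.

```python
import unittest


def integration(test_item):
    """Tag a test method as an integration test (illustrative marker)."""
    test_item.is_integration = True
    return test_item


class PriceTests(unittest.TestCase):
    def test_total(self):
        self.assertEqual(sum([2, 3]), 5)

    @integration
    def test_total_is_persisted(self):
        # Placeholder for a test that would talk to a real database.
        self.assertTrue(True)


def split_suites(test_case_class):
    """Return (unit_suite, integration_suite) for one TestCase class."""
    unit, integ = unittest.TestSuite(), unittest.TestSuite()
    for name in unittest.defaultTestLoader.getTestCaseNames(test_case_class):
        method = getattr(test_case_class, name)
        target = integ if getattr(method, "is_integration", False) else unit
        target.addTest(test_case_class(name))
    return unit, integ


unit_suite, integration_suite = split_suites(PriceTests)
```

The pipeline can then run `unit_suite` on every commit and reserve `integration_suite` for stages that have the required environment.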

3. Build AI Feedback Loops

Use historical test performance data to inform test case prioritization and reliability scoring.
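
As a rough illustration of reliability scoring, the sketch below scores each test by how often its result flips between consecutive runs, then orders tests so recent failures and flaky tests run first. The scoring rule and the `flakiness` and `prioritize` helpers are assumptions for this example, not the method of any particular tool.

```python
def flakiness(history):
    """Score each test by how often its result flips between runs.

    `history` maps a test name to a list of booleans (True = passed),
    oldest run first. 0.0 is perfectly stable, 1.0 flips on every run.
    """
    scores = {}
    for test, results in history.items():
        if len(results) < 2:
            scores[test] = 0.0
            continue
        flips = sum(a != b for a, b in zip(results, results[1:]))
        scores[test] = flips / (len(results) - 1)
    return scores


def prioritize(history):
    """Order tests: most recent failures first, then the flakiest ones."""
    scores = flakiness(history)
    return sorted(
        history,
        key=lambda t: (history[t][-1] if history[t] else True, -scores[t], t),
    )


history = {
    "test_login": [True, True, True, True],
    "test_checkout": [True, False, True, False],
    "test_search": [True, True, False, True],
}

print(prioritize(history))  # ['test_checkout', 'test_search', 'test_login']
```

A learned model could replace the hand-written score, but a transparent baseline like this makes it easier to judge whether the model is adding anything.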

4. Keep Tests Environment-Aware

Use context-aware AI models that understand deployment environments, API tokens, and staging setups.

5. Document AI-Generated Tests

AI-generated tests should be self-explanatory or come with descriptive summaries to improve developer onboarding and maintainability.

8. Challenges and Limitations

| Challenge | Solution |
|---|---|
| False Positives | Tune AI models, review suggestions |
| Test Redundancy | Use coverage tools to identify duplicates |
| Environment Flakiness | Use isolated containers, better mocks |
| Limited Language Support | Choose tools aligned with your tech stack |
| Security & Compliance | Validate AI suggestions for data handling and privacy |
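
For the test redundancy row, here is a small sketch of how coverage data can flag candidates. It assumes you already have per-test coverage as a mapping from test name to executed `(file, line)` pairs; the `redundant_groups` helper and the sample data are made up for illustration.

```python
from collections import defaultdict


def redundant_groups(coverage):
    """Group tests that execute exactly the same set of lines.

    `coverage` maps a test name to the set of (file, line) pairs it ran.
    """
    by_footprint = defaultdict(list)
    for test, lines in coverage.items():
        by_footprint[frozenset(lines)].append(test)
    return sorted(
        sorted(tests) for tests in by_footprint.values() if len(tests) > 1
    )


coverage = {
    "test_add_small": {("calc.py", 2)},
    "test_add_large": {("calc.py", 2)},
    "test_divide": {("calc.py", 5), ("calc.py", 6)},
}

print(redundant_groups(coverage))  # [['test_add_large', 'test_add_small']]
```

Identical coverage only marks tests as candidates for review: two tests can run the same lines while checking different inputs or assertions.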

9. The Future of AI in Test Automation

AI’s role in software testing is only expanding. Expect innovations in:

- Test Impact Analysis: Predict which tests matter for a given code change

- Autonomous Test Agents: AI bots that continuously write, run, and repair tests

- Production-Aware Testing: Tests generated based on real-world user behavior

- Natural Language QA: Describe what needs testing, and AI writes + validates the test
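
Test impact analysis already has a simple deterministic form that predictive approaches build on: select the tests whose recorded footprint overlaps the changed files. The sketch below assumes a hypothetical `test_to_files` mapping collected from earlier coverage runs:

```python
def impacted_tests(changed_files, test_to_files):
    """Select tests whose recorded footprint overlaps the changed files."""
    changed = set(changed_files)
    return sorted(
        test for test, files in test_to_files.items() if changed & set(files)
    )


test_to_files = {
    "test_add": {"calc.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_login": {"auth.py"},
}

print(impacted_tests(["payment.py"], test_to_files))  # ['test_checkout']
```

A predictive version would rank tests by estimated failure likelihood instead of returning a strict overlap, which helps when the recorded mapping is stale or incomplete.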

Conclusion

AI is no longer a novelty in software development—it’s a necessity. Especially in the area of unit and integration testing, AI delivers real, measurable benefits:

- Faster development cycles

- Higher test coverage

- Fewer bugs in production

- More time for developers to focus on core features

By adopting AI tools for automated testing, your organization gains a competitive advantage in quality, speed, and innovation.

Whether you’re a startup deploying weekly or an enterprise managing global microservices, the time to integrate AI into your testing strategy is now.

FAQs

Q1: Can AI fully replace human test engineers?

No. AI helps generate and maintain tests, but human insight is critical for edge cases, business logic, and exploratory testing.

Q2: Do AI tools support all languages?

Not yet. Most popular tools support major languages like Java, Python, JavaScript, and C#. Niche language support varies.

Q3: Can AI detect bugs without test cases?

Some tools use static analysis or fuzz testing to detect anomalies, but structured test cases are still essential.
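
Here is a toy example of the fuzzing idea: feed random inputs to a function and record anything that raises. `mean_of_digits` is a hypothetical function with a planted bug, and the `fuzz` loop is far simpler than a real fuzzer, which would guide input generation using coverage feedback.

```python
import random


def mean_of_digits(text):
    """Hypothetical function under test: average the digits found in `text`."""
    digits = [int(ch) for ch in text if ch.isdigit()]
    return sum(digits) / len(digits)  # bug: fails when there are no digits


def fuzz(func, runs=500, seed=0):
    """Call `func` with random short strings and collect the exceptions."""
    rng = random.Random(seed)  # a fixed seed keeps findings reproducible
    alphabet = "0123456789%-. a"
    crashes = []
    for _ in range(runs):
        text = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 4)))
        try:
            func(text)
        except Exception as exc:
            crashes.append((text, type(exc).__name__))
    return crashes


crashes = fuzz(mean_of_digits)
print(sorted({name for _, name in crashes}))
```

Each crashing input is a good candidate for a permanent regression test, which is where structured test cases come back in.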

Q4: Is AI-based testing suitable for regulated industries?

Yes—with careful validation. Industries like healthcare and finance benefit from the speed but must enforce auditability and compliance.

Q5: What’s the ROI of implementing AI-based testing?

Results vary by team and codebase. Commonly cited figures are a 30–50% reduction in test development time, along with faster feedback in CI/CD pipelines, so measure against your own baseline.